Data-Driven Product Strategy: How to Implement AI Modules That Actually Impact P&L

Engineering teams tend to optimize for technical metrics such as model accuracy and latency. Business leaders focus on revenue growth, cost reduction, and margin improvement. Building AI modules that actually affect profit and loss means bridging the two: product strategy has to be shaped by data-driven decisions. So how do you translate model performance into financial outcomes in practice? Let’s figure it out together.

Why AI Needs to Be Practical

Business leaders don’t fund AI projects to explore possibilities. They aim to improve profitability.

When executive teams review quarterly results, they’re looking at specific line items: customer acquisition costs, operational expenses, revenue per user, churn rates, and so on. AI solutions for business that don’t align with these metrics become a waste of budget, regardless of their technical sophistication.

The practical value of AI comes from four core capabilities:

- Automation. It reduces labor costs.

- Scoring systems. They improve decision accuracy.

- Precision. It minimizes waste or errors.

- Personalization. It increases conversion rates.

Together, these capabilities turn AI from an experimental technology into a business tool, and they are where measurable AI ROI comes from.

High-ROI AI Use Cases in Custom Software Development

Companies achieve measurable P&L impact when they deploy AI in areas with clear cost structures or revenue dependencies. The right mix is different for every business: some use AI for customer retention, while others focus exclusively on internal efficiency. Still, certain areas consistently deliver high value across domains.

Automation Use Cases

AI-powered document processing eliminates manual data entry for invoices, contracts, insurance claims, and compliance forms. NLP-based support automation can resolve many tier-one queries without human intervention. Workflow automation then connects these capabilities across departments into end-to-end business processes.

Personalization & Recommendation Engines

The first thing that comes to mind is personalized content feeds: ecommerce and media platforms use them to surface relevant content, and B2B SaaS products can personalize dashboards in the same way. Product recommendations are another example of this tactic in action. Both keep users engaged, which helps prevent churn, and the engagement signals they produce also support churn prediction.

AI-powered personalization works for internal functions, too. For example, organizations can set up personalized onboarding sequences that adapt to user role, company size, or behavioral signals. Similarly, dynamic content systems adjust messaging, offers, and interface elements for different user segments and roles.

Scoring & Decision-Making Systems

With AI-based transaction scoring, companies can evaluate payment patterns, user behavior, and contextual signals in real time. AI risk scoring, in turn, handles credit applications, insurance claims, contract terms, and similar assessments. Lead scoring for B2B and SaaS is another good fit: the model is trained to prioritize sales efforts toward the prospects most likely to convert and stay, turning real-time signals into predictive analytics.

Operational Efficiency

Procurement is a natural target. Next-best-action models can automate routine purchasing decisions and flag favorable buying windows. Resource planning benefits from ML-based demand forecasting: models can predict capacity needs and inform staff allocation and equipment utilization. Reducing SLA times is another viable scenario, since AI makes it easier to keep service delivery within contracted timeframes. In each case, complex operational processes become scalable, automated ones.

Data & Product Readiness: What You Need Before Implementing AI Modules

AI modules rarely fail because of algorithm limitations. More often, they fail because the underlying product architecture and data infrastructure are missing. The good news is that this is preventable with a proper readiness assessment. To avoid wasting time and money, start your AI implementation strategy by analyzing the following elements.

Architecture Requirements

Event-driven architecture lets AI modules respond to user actions, system changes, and external triggers in real time. This approach is foundational for AI in custom software development because it decouples AI services from core application logic: models consume events, generate predictions, and trigger downstream actions without blocking primary workflows.
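To make the decoupling concrete, here is a minimal in-process publish/subscribe sketch. The event names, the `EventBus` class, and the `churn_risk_model` rule are all hypothetical stand-ins. In production you would typically use a message broker so that AI consumers run asynchronously; in this toy version handlers run synchronously, and the point is only that the publisher never knows which AI module is listening.

```python
from collections import defaultdict
from typing import Callable, Dict, List

Handler = Callable[[dict], None]


class EventBus:
    """Toy in-process event bus (hypothetical); a broker plays this role in production."""

    def __init__(self) -> None:
        self._handlers: Dict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, event_type: str, handler: Handler) -> None:
        self._handlers[event_type].append(handler)

    def publish(self, event_type: str, payload: dict) -> None:
        # The publisher does not know which consumers (AI or otherwise) exist.
        for handler in self._handlers[event_type]:
            handler(payload)


def churn_risk_model(payload: dict) -> float:
    # Placeholder rule standing in for a trained model (assumption).
    return min(1.0, payload["days_inactive"] / 30)


bus = EventBus()
flagged: List[str] = []


def on_session_ended(payload: dict) -> None:
    # AI consumer: score the event, then emit a new event for downstream actions.
    score = churn_risk_model(payload)
    if score >= 0.5:
        bus.publish("retention.offer_requested", {"user_id": payload["user_id"], "score": score})


def on_offer_requested(payload: dict) -> None:
    # Downstream action, e.g. a CRM task; here we just record the user id.
    flagged.append(payload["user_id"])


bus.subscribe("session.ended", on_session_ended)
bus.subscribe("retention.offer_requested", on_offer_requested)

bus.publish("session.ended", {"user_id": "u1", "days_inactive": 3})
bus.publish("session.ended", {"user_id": "u2", "days_inactive": 21})
print(flagged)  # ['u2']
```

Swapping the placeholder rule for a real model changes nothing for the code that publishes `session.ended`, which is the property the architecture is meant to protect.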

Plan early for connecting additional ML modules. Adding ML functionality at any point is far easier when the product exposes dedicated API endpoints that support both synchronous predictions (the caller waits for the answer) and asynchronous processing (the caller submits a job and collects the result later).
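The two call styles can be sketched with the standard library alone. `PredictionService`, `predict`, and its weights are hypothetical; a real service would sit behind HTTP endpoints and a job queue, but the contract is similar: `predict_sync` answers immediately, while `submit_async` returns a job id whose result is fetched later.

```python
import uuid
from concurrent.futures import Future, ThreadPoolExecutor
from typing import Dict, List, Optional


def predict(features: dict) -> float:
    # Hypothetical stand-in for a trained model.
    return 0.5 * features.get("open_tickets", 0) + 0.25 * features.get("late_payments", 0)


class PredictionService:
    """Sketch of the two call styles an ML endpoint might expose."""

    def __init__(self, workers: int = 2) -> None:
        self._pool = ThreadPoolExecutor(max_workers=workers)
        self._jobs: Dict[str, Future] = {}

    def predict_sync(self, features: dict) -> float:
        # Synchronous: the caller waits for the prediction, as in a request/response API.
        return predict(features)

    def submit_async(self, batch: List[dict]) -> str:
        # Asynchronous: the caller gets a job id immediately and collects results later.
        job_id = str(uuid.uuid4())
        self._jobs[job_id] = self._pool.submit(lambda: [predict(f) for f in batch])
        return job_id

    def get_result(self, job_id: str, timeout: Optional[float] = None) -> List[float]:
        # Blocks until the job finishes; raises concurrent.futures.TimeoutError on timeout.
        return self._jobs[job_id].result(timeout=timeout)

    def shutdown(self) -> None:
        self._pool.shutdown(wait=True)


service = PredictionService()
print(service.predict_sync({"open_tickets": 2, "late_payments": 4}))  # 2.0
job = service.submit_async([{"open_tickets": 1}, {"late_payments": 2}])
print(service.get_result(job, timeout=5))  # [0.5, 0.5]
service.shutdown()
```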

Data Collection & Event Design

Effective AI modules require event data that captures user intent, context, and outcomes. Before building AI functionality, product teams must instrument their applications to log events that represent the problem space, and each event needs enough context to be usable as training data.

Logging strategy also matters for ML hypothesis testing, because the goal is to capture data before any model exists. Teams should record decision points where AI could eventually add value, along with the outcomes of those decisions. This historical data becomes the training set once the organization is ready to build AI modules.
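One way to log decision points before any model exists is a small, explicit event schema written as JSON Lines. The field names below (`event_type`, `context`, `decision`, `outcome`) are illustrative assumptions, not a standard; what matters is that each record keeps the inputs visible at decision time next to the decision and, eventually, its outcome.

```python
import io
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass
class DecisionEvent:
    # Field names are illustrative assumptions, not a standard schema.
    event_type: str                 # e.g. "invoice.review_decision"
    user_id: str                    # who made the decision (pseudonymize if needed)
    context: dict                   # inputs that were visible at decision time
    decision: str                   # what the person or rule decided
    outcome: Optional[str] = None   # often known only later; can arrive as a follow-up event
    timestamp: str = field(default_factory=lambda: datetime.now(timezone.utc).isoformat())


def log_event(event: DecisionEvent, sink) -> None:
    # JSON Lines: one object per line, easy to load later as a training set.
    sink.write(json.dumps(asdict(event), sort_keys=True) + "\n")


buffer = io.StringIO()  # stands in for a log file or event stream
log_event(DecisionEvent(
    event_type="invoice.review_decision",
    user_id="clerk-17",
    context={"amount": 1250.0, "vendor_known": True},
    decision="approved",
    outcome="paid_on_time",
), buffer)

rows = [json.loads(line) for line in buffer.getvalue().splitlines()]
print(rows[0]["decision"], rows[0]["outcome"])  # approved paid_on_time
```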

Data Quality and Governance

Duplicate records distort model training. Developers need to identify and merge duplicates based on fuzzy matching logic appropriate to the data type. Another aspect is data normalization: standardizing formats, units, and categorical values so models can learn consistent patterns.
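A minimal sketch of normalization followed by fuzzy deduplication, using `difflib.SequenceMatcher` from the Python standard library. The suffix list and the 0.85 similarity threshold are assumptions to tune per data type. The pairwise comparison is quadratic, so larger datasets usually need a blocking step (for example, comparing only records that share a prefix) or a dedicated entity-resolution tool.

```python
import re
from difflib import SequenceMatcher
from typing import List

# Illustrative list of legal-form suffixes to ignore when comparing names.
LEGAL_SUFFIXES = {"inc", "ltd", "llc", "gmbh", "corp", "corporation", "co"}


def normalize_company(name: str) -> str:
    # Normalization: lowercase, replace punctuation with spaces, drop legal suffixes.
    cleaned = re.sub(r"[^\w\s]", " ", name.lower())
    return " ".join(t for t in cleaned.split() if t not in LEGAL_SUFFIXES)


def deduplicate(names: List[str], threshold: float = 0.85) -> List[str]:
    """Keep the first record of each group of near-identical normalized names."""
    kept: List[str] = []
    kept_norm: List[str] = []
    for name in names:
        norm = normalize_company(name)
        if any(SequenceMatcher(None, norm, k).ratio() >= threshold for k in kept_norm):
            continue  # fuzzy match: treat as a duplicate of an earlier record
        kept.append(name)
        kept_norm.append(norm)
    return kept


records = ["Acme Corp.", "ACME Corporation", "Acmee Inc", "Globex GmbH", "Initech"]
print(deduplicate(records))  # ['Acme Corp.', 'Globex GmbH', 'Initech']
```

Here "ACME Corporation" becomes an exact match after normalization, while the typo "Acmee" is caught only by the fuzzy comparison.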

Finally, there is dataset stability: maintaining consistent schemas, feature definitions, and data collection methods. Only then do predictive models trained on historical data remain valid when applied to new data. Breaking changes to the data structure require retraining to prevent model degradation.
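A simple guard against silent schema drift is to compare each incoming record with the schema the model was trained on and surface breaking changes before they reach inference. `EXPECTED_SCHEMA` and its fields are hypothetical; schema registries and data-validation libraries do the same job at scale.

```python
from typing import Dict, List

# Hypothetical schema the current model was trained on.
EXPECTED_SCHEMA: Dict[str, type] = {
    "customer_id": str,
    "monthly_spend": float,
    "plan": str,
}


def schema_diff(record: dict, expected: Dict[str, type] = EXPECTED_SCHEMA) -> List[str]:
    """List breaking changes between an incoming record and the training schema."""
    problems = []
    for name, expected_type in expected.items():
        if name not in record:
            problems.append(f"missing field: {name}")
        elif not isinstance(record[name], expected_type):
            actual = type(record[name]).__name__
            problems.append(f"type change: {name} is {actual}, expected {expected_type.__name__}")
    for name in sorted(record.keys() - expected.keys()):
        problems.append(f"unexpected field: {name}")
    return problems


print(schema_diff({"customer_id": "c1", "monthly_spend": 49.0, "plan": "pro"}))  # []
print(schema_diff({"customer_id": "c1", "monthly_spend": "49", "tier": "pro"}))
```

A non-empty result is a signal either to fix the upstream producer or to plan a retraining run against the new structure.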

Privacy & Compliance

Legal teams and privacy officers should approve any AI integration in custom software before development starts. Compliance issues discovered after deployment are very likely to mean rework.

GDPR (DSGVO in German) and similar regulations require data minimization: organizations must collect only the information necessary for a specific purpose and delete data that is no longer needed. AI systems must use pseudonymization or anonymization where possible and maintain audit trails that document data usage.
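Data minimization and pseudonymization can be applied at the point where rows enter a training set. This sketch assumes a purpose-specific field allowlist and a keyed hash (HMAC-SHA256 from the standard library) so the same user maps to the same token, keeping joins possible, while the raw identifier never reaches the dataset. The key and field names are placeholders. Note that pseudonymized data still counts as personal data under GDPR; only true anonymization takes it out of scope.

```python
import hashlib
import hmac

# Assumption: in practice the key comes from a secrets manager, stored apart from the data.
PSEUDONYM_KEY = b"replace-with-secret-from-vault"
# Purpose-specific allowlist (hypothetical): everything else is dropped.
ALLOWED_FIELDS = {"user_id", "country", "plan", "signup_month"}


def pseudonymize(value: str, key: bytes = PSEUDONYM_KEY) -> str:
    # Keyed hash: stable per user, but cannot be linked back to the person without the key.
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def prepare_training_row(raw: dict) -> dict:
    # Data minimization: keep only the fields needed for the stated purpose.
    row = {k: v for k, v in raw.items() if k in ALLOWED_FIELDS}
    row["user_id"] = pseudonymize(row["user_id"])
    return row


raw = {
    "user_id": "alice@example.com",
    "email": "alice@example.com",
    "phone": "555-0100",
    "country": "DE",
    "plan": "pro",
}
row = prepare_training_row(raw)
print(sorted(row))  # ['country', 'plan', 'user_id']
```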

One more requirement is role-based access controls. Companies must ensure that only authorized personnel can access training data, model outputs, or personally identifiable information.
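Role-based access control boils down to a deny-by-default mapping from roles to permissions, plus an audit record of every access attempt. The roles and permissions below are hypothetical; real deployments usually source roles from an identity provider and enforce checks in the data platform itself.

```python
from enum import Enum
from typing import Dict, List, Set, Tuple


class Permission(Enum):
    READ_TRAINING_DATA = "read_training_data"
    READ_MODEL_OUTPUTS = "read_model_outputs"
    READ_PII = "read_pii"


# Hypothetical role map; real roles would come from your identity provider.
ROLE_PERMISSIONS: Dict[str, Set[Permission]] = {
    "ml_engineer": {Permission.READ_TRAINING_DATA, Permission.READ_MODEL_OUTPUTS},
    "support_agent": {Permission.READ_MODEL_OUTPUTS},
    "privacy_officer": {Permission.READ_TRAINING_DATA, Permission.READ_MODEL_OUTPUTS, Permission.READ_PII},
}

audit_log: List[Tuple[str, str, bool]] = []


def can(roles: List[str], permission: Permission) -> bool:
    # Deny by default: unknown roles get no permissions.
    return any(permission in ROLE_PERMISSIONS.get(role, set()) for role in roles)


def access(user: str, roles: List[str], permission: Permission) -> bool:
    allowed = can(roles, permission)
    audit_log.append((user, permission.value, allowed))  # record every attempt, allowed or not
    return allowed


print(access("dana", ["ml_engineer"], Permission.READ_PII))  # False
print(access("sam", ["privacy_officer"], Permission.READ_PII))  # True
```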

Infrastructure for AI

Without reliable pipelines, AI engineers spend excessive time on data preparation instead of model development. ETL (extract, transform, load) and ELT (extract, load, transform) pipelines move data from operational systems into formats suitable for model training and inference. This foundation is a core part of an effective AI implementation strategy, ensuring that models can be trained, deployed, and scaled without friction.
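A toy ETL run shows the three stages. The source rows are hypothetical, and an in-memory SQLite database stands in for the warehouse. In an ELT variant you would load the raw rows first and run the type fixes and normalization as SQL inside the warehouse instead.

```python
import sqlite3
from typing import List, Tuple


def extract() -> List[dict]:
    # Extract: rows as they might come from an operational system (hypothetical data).
    return [
        {"order_id": 1, "customer": "c1", "amount": "120.50", "status": "PAID"},
        {"order_id": 2, "customer": "c2", "amount": "80", "status": "refunded"},
        {"order_id": 3, "customer": "c1", "amount": "42.00", "status": "paid"},
    ]


def transform(rows: List[dict]) -> List[Tuple]:
    # Transform: fix types and normalize categorical values before loading.
    return [(r["order_id"], r["customer"], float(r["amount"]), r["status"].lower()) for r in rows]


def load(conn: sqlite3.Connection, rows: List[Tuple]) -> None:
    # Load: write into the analytics store (in-memory SQLite here, a warehouse in practice).
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL, status TEXT)"
    )
    conn.executemany("INSERT OR REPLACE INTO orders VALUES (?, ?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")
load(conn, transform(extract()))
paid_total = conn.execute(
    "SELECT customer, SUM(amount) FROM orders WHERE status = 'paid' GROUP BY customer"
).fetchall()
print(paid_total)  # [('c1', 162.5)]
```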

Feature stores centralize the logic for computing model inputs. They ensure that training and production environments use identical feature definitions. Data warehouses and lakehouse architectures provide the storage layer for both raw data and computed features. They support model training and operation without impacting operational database performance.
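The core idea of a feature store, a single definition of each feature shared by training and serving, can be shown with a small registry. This sketch covers only that idea. Real feature stores add storage, point-in-time correct retrieval, and low-latency online serving. The feature names and entity fields are hypothetical.

```python
from datetime import date
from typing import Callable, Dict

FeatureFn = Callable[[dict], float]

# One registry of feature definitions shared by the training and serving paths.
FEATURES: Dict[str, FeatureFn] = {}


def feature(name: str) -> Callable[[FeatureFn], FeatureFn]:
    def register(fn: FeatureFn) -> FeatureFn:
        FEATURES[name] = fn
        return fn
    return register


@feature("days_since_last_order")
def days_since_last_order(entity: dict) -> float:
    return float((entity["as_of"] - entity["last_order_date"]).days)


@feature("avg_order_value")
def avg_order_value(entity: dict) -> float:
    orders = entity["order_amounts"]
    return sum(orders) / len(orders) if orders else 0.0


def compute_features(entity: dict) -> Dict[str, float]:
    # Same code for offline training rows and online requests, so definitions cannot drift apart.
    return {name: fn(entity) for name, fn in FEATURES.items()}


customer = {"as_of": date(2024, 3, 31), "last_order_date": date(2024, 3, 1), "order_amounts": [40.0, 60.0]}
print(compute_features(customer))  # {'days_since_last_order': 30.0, 'avg_order_value': 50.0}
```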

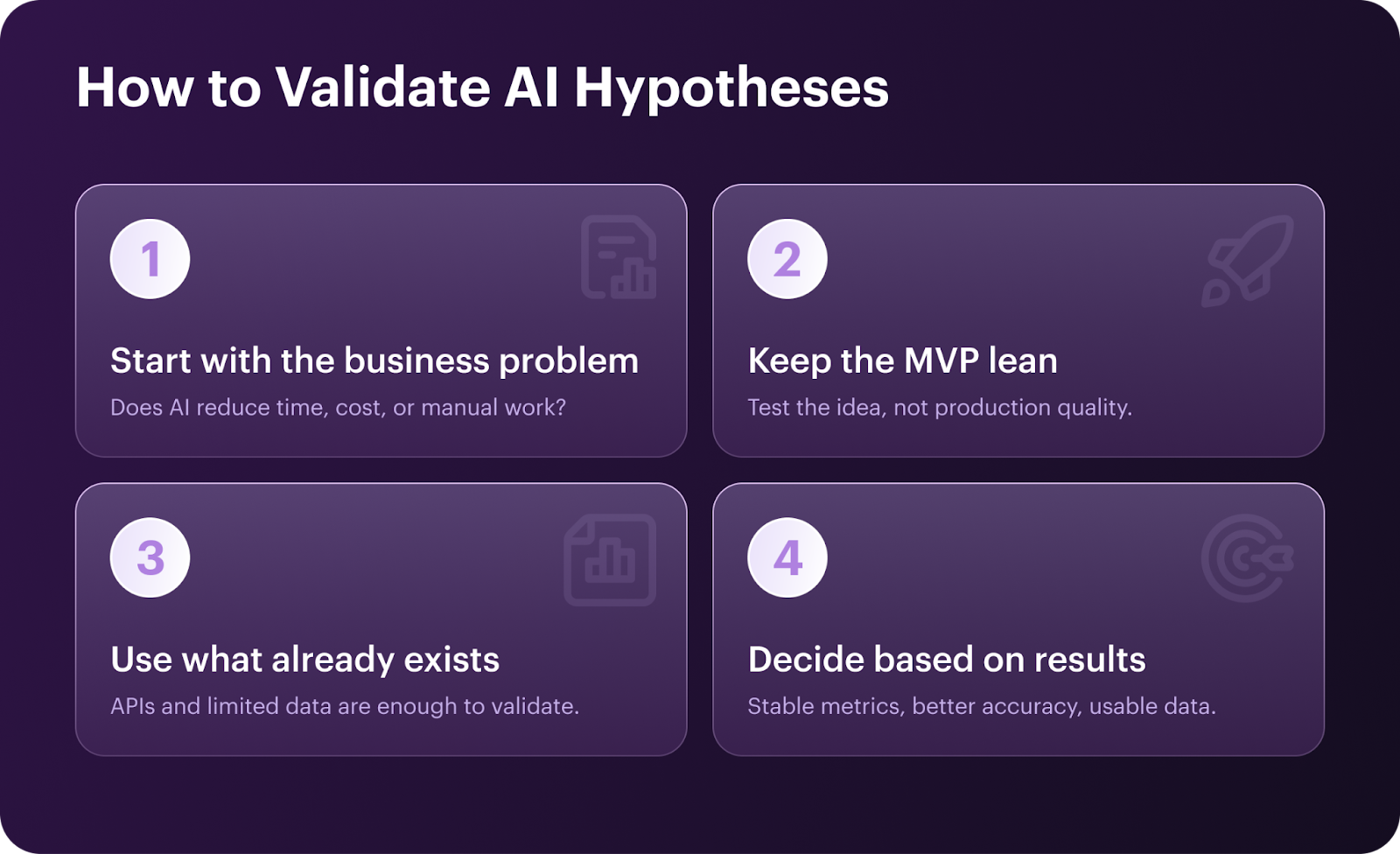

How to Validate AI Hypotheses: Building an MVP with an AI Component

Many AI projects waste resources by building sophisticated models before validating whether AI actually solves the business problem. AI-enabled MVP development exists to test a specific hypothesis, for example, whether automation reduces costs enough to justify further development. At this stage, the question is not full technical capability but “Will this work when we scale it?”

What an “AI MVP” Actually Means

An AI MVP validates whether AI capabilities deliver measurable business value, not whether you can build a complex model. The goal is to determine, for example, whether automated document processing cuts invoice handling time by 50%. In short, teams should focus on outcomes and business impact rather than technical complexity: success means validating the business case with measurable results.

Lean Machine Learning in Product Development

How can you test AI without building the full infrastructure? External models accessed through APIs (OpenAI, Anthropic, Google Cloud AI, AWS) let teams run tests without training custom models. Many use cases that seem to require custom models run adequately on general-purpose APIs, at least for validation.

Synthetic data, generated from known patterns or augmented from small real datasets, enables model training when production data pipelines don’t exist yet. It has limitations, since it only reflects the patterns built into it, but it lets teams build proof-of-concept models. Likewise, simplified features are often enough to validate the idea and prove the core value.
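Both approaches fit in a few lines. The generation rule below ("invoices over 5,000 from unknown vendors need review"), the lognormal amount distribution, and the 80% known-vendor share are assumptions chosen for illustration. The augmentation step jitters amounts by up to 5%, and it also shows the limitation mentioned above: a jittered amount near the threshold may no longer match its copied label, which is why synthetic data alone cannot replace real examples.

```python
import random
from typing import List


def synthetic_invoices(n: int, seed: int = 42) -> List[dict]:
    """Generate labeled invoices from an assumed (hypothetical) business rule."""
    rng = random.Random(seed)  # seeded so experiments are reproducible
    rows = []
    for _ in range(n):
        amount = round(rng.lognormvariate(7, 1), 2)  # right-skewed amounts (assumption)
        known_vendor = rng.random() < 0.8
        needs_review = (not known_vendor) and amount > 5000
        rows.append({"amount": amount, "known_vendor": known_vendor, "needs_review": needs_review})
    return rows


def augment(real_rows: List[dict], copies: int, seed: int = 0) -> List[dict]:
    """Augment a small real dataset by jittering amounts by up to +/-5%; labels are copied as-is."""
    rng = random.Random(seed)
    out = list(real_rows)
    for row in real_rows:
        for _ in range(copies):
            out.append(dict(row, amount=round(row["amount"] * rng.uniform(0.95, 1.05), 2)))
    return out


data = synthetic_invoices(100)
print(sum(r["needs_review"] for r in data), "of", len(data), "invoices flagged for review")
print(len(augment(data[:5], copies=3)))  # 20
```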

When to Move from Prototype to Production

The first readiness signal is metric stability across multiple test periods. It shows that the AI module performs consistently rather than capturing temporary patterns or data artifacts; in AI prototyping for startups, this milestone confirms that the model is learning real signals rather than short-term noise. The second requirement is sufficient accuracy: the AI module should outperform the existing system (or human team). Production readiness also requires data pipelines that reliably deliver training data and serve predictions at the required latency.
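The first two signals can be turned into an explicit go/no-go check. This sketch measures stability as the coefficient of variation (standard deviation divided by mean) of a higher-is-better metric across test periods, and requires the model to beat the baseline in every period. The thresholds (at least 3 periods, at most 5% variation) are illustrative assumptions, not recommended values.

```python
from statistics import mean, pstdev
from typing import List, Tuple


def ready_for_production(
    period_metrics: List[float],  # e.g. accuracy per test period (higher is better)
    baseline: float,              # same metric for the existing system or team
    min_periods: int = 3,
    max_cv: float = 0.05,
    min_uplift: float = 0.0,
) -> Tuple[bool, str]:
    """Check stability across periods and consistent improvement over the baseline.

    All thresholds are illustrative assumptions; derive real ones from the business case.
    """
    if len(period_metrics) < min_periods:
        return False, f"need at least {min_periods} test periods"
    avg = mean(period_metrics)
    if avg <= 0:
        return False, "metric must be positive to measure relative variation"
    cv = pstdev(period_metrics) / avg  # coefficient of variation across periods
    if cv > max_cv:
        return False, f"unstable: variation {cv:.1%} exceeds {max_cv:.1%}"
    if min(period_metrics) - baseline <= min_uplift:
        return False, "does not beat the baseline in every period"
    return True, "stable and better than the baseline in every period"


print(ready_for_production([0.91, 0.92, 0.90], baseline=0.85))
print(ready_for_production([0.95, 0.70, 0.92], baseline=0.85))
```

The second call fails on stability even though its average is above the baseline, which is exactly the "temporary patterns" case the check is meant to catch.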

Common Pitfalls of AI MVPs

Businesses tend to fall into the same traps. Here are several practices to avoid:

- Endless prototyping. Set clear benchmarks and success criteria, and limit the number of prototypes before committing to full-scale implementation.

- Lack of real data. Teams must validate with actual production data even during the MVP phase. Synthetic or non-production data is fine as a supplement, but not as the only source.

- Ignoring edge cases. Testing only happy-path scenarios leads to failures later: unusual inputs, rare user types, system errors, and boundary conditions can trigger severe issues in production.

These points may seem obvious. Yet this is exactly where many companies make mistakes that stall development and undermine the motivation to innovate.

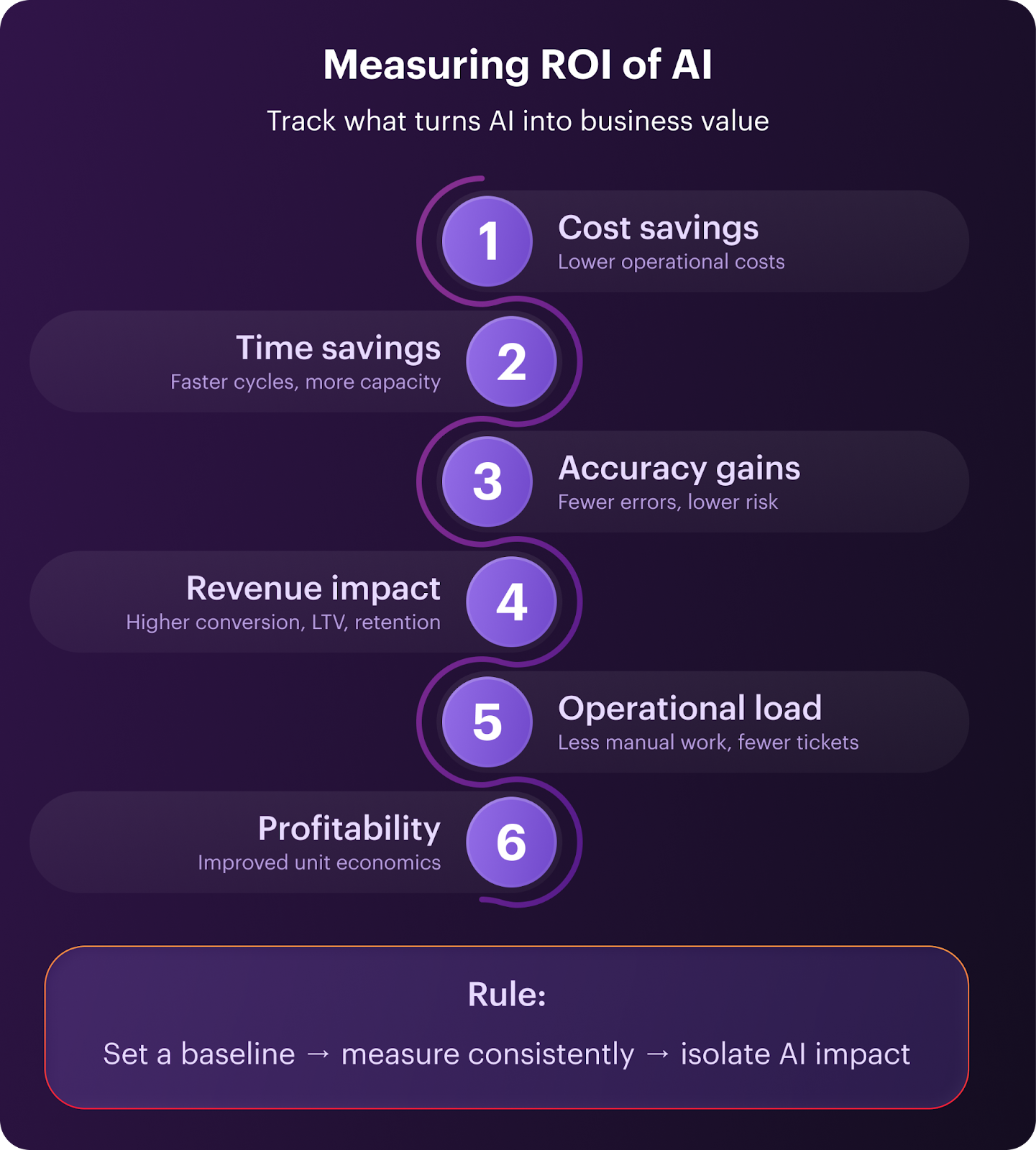

Measuring ROI of AI Initiatives

AI investments require the same financial justification as any other technology spend. To calculate AI ROI, consider both direct economic impact and operational improvements; the latter ultimately translate into cost avoidance or added capacity. Here is what to track:

- Cost savings. Calculate the difference between pre-AI operational costs and post-deployment costs. Account for AI infrastructure and maintenance expenses.

- Time savings. Track how AI reduces cycle times for key processes — document processing, customer onboarding, support resolution. Quantify the capacity gains.

- Accuracy improvements. Measure the financial impact of better decisions, such as fraud caught, inventory waste reduced, or compliance violations prevented. Compare error rates and their associated costs before and after AI.

- Revenue increases. Track conversion rate improvements, average order value changes, customer lifetime value increases, and churn reduction.

- Operational load reduction. Measure how smarter workflows decrease manual workload — for example, in hours reclaimed or tickets deflected.

- Profitability gains. AI affects unit economics, directly impacting profit margins for each transaction or customer. Look at your specific case and validate results through structured ML hypothesis testing.
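Once each category above has a monetary value, the ROI arithmetic itself is simple: ROI = (total annual gains - total annual AI costs) / total annual AI costs. The figures below are hypothetical, and two conventions are assumptions worth stating explicitly. Hours saved are valued at a loaded hourly cost and counted only once (not also under cost savings), and revenue uplift is counted at contribution margin rather than as gross revenue.

```python
from typing import Dict


def value_of_time_saved(hours_saved: float, loaded_hourly_cost: float) -> float:
    # Converts capacity gains into money; count it once, not also under cost savings.
    return hours_saved * loaded_hourly_cost


def ai_roi(gains: Dict[str, float], costs: Dict[str, float]) -> float:
    """ROI = (total annual gains - total annual AI costs) / total annual AI costs."""
    total_costs = sum(costs.values())
    if total_costs <= 0:
        raise ValueError("AI costs must be positive")
    return (sum(gains.values()) - total_costs) / total_costs


# Hypothetical annual figures for one AI module, all in the same currency.
gains = {
    "support_hours_saved": value_of_time_saved(2_000, 60.0),  # 120,000
    "fraud_losses_avoided": 45_000.0,
    "margin_from_conversion_uplift": 35_000.0,  # extra revenue x contribution margin
}
costs = {
    "infrastructure": 30_000.0,
    "maintenance": 20_000.0,
    "amortized_build": 50_000.0,
}
print(f"ROI: {ai_roi(gains, costs):.0%}")  # ROI: 100%
```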

Establish baseline metrics before implementation and track changes consistently over time, isolating the AI impact from other variables. The goal isn’t to prove that AI works in general; it’s to attribute better outcomes to the specific AI module.
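One common way to isolate AI impact from other variables is difference-in-differences: compare the before/after change in a group that uses the AI module with the change in a comparable group that does not, over the same period. This is a swapped-in technique, not something prescribed above, and it assumes both groups would have trended the same way without AI. The handling-time numbers are hypothetical.

```python
from statistics import mean
from typing import Sequence


def diff_in_diff(
    treated_before: Sequence[float],
    treated_after: Sequence[float],
    control_before: Sequence[float],
    control_after: Sequence[float],
) -> float:
    """Change in the AI-enabled group minus the change in a comparable group without AI."""
    treated_change = mean(treated_after) - mean(treated_before)
    control_change = mean(control_after) - mean(control_before)
    return treated_change - control_change


# Hypothetical minutes per invoice, sampled weekly.
treated_before = [30, 32, 28]  # team that later adopts the AI module
treated_after = [18, 20, 16]
control_before = [31, 29, 30]  # comparable team without AI
control_after = [27, 29, 28]

print(diff_in_diff(treated_before, treated_after, control_before, control_after))  # -10
```

The AI team improved by 12 minutes per invoice, but the control team also improved by 2 minutes for other reasons, so roughly 10 minutes per invoice is attributable to the AI module under the stated assumption.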

Key Takeaways

AI delivers business value when treated as operational infrastructure, not an experimental technology. Organizations that succeed with AI-based process optimization typically start with clear hypotheses about which P&L line items will improve and by how much. Goals can be reassessed once execution begins, but clarity remains essential. The same applies to data: readiness determines AI feasibility more than the algorithm itself. An MVP then validates the business hypotheses and informs further investment in technical capability.

If you’re ready to turn AI hypotheses into execution, Darly Solutions can support the process — request a consultation.

FAQ

Readiness depends on three factors, which should be evaluated as part of an overall AI implementation strategy. First, there should be a clearly defined business problem with measurable outcomes. Second, you need sufficient data that captures the events and context required to train models. Finally, your technical infrastructure should be capable of integrating AI modules.

AI modules require an event-driven architecture that captures user actions, system changes, and business outcomes with sufficient context for model training. This approach lays the foundation for data-driven solutions by logging not just what happened but why: user identifiers, timestamps, session data, decision contexts, and outcomes. The specific data types required vary by industry and use case.

Connect AI performance to specific financial metrics: cost savings from fewer labor hours or less error correction, revenue increases from improved conversion or retention rates, or margin improvements from better resource utilization, fraud detection, and anomaly detection. Establish baseline metrics before implementation and track results over time.

The most expensive mistake is building a complete production infrastructure before validating business value and expected ROI. Another common and costly issue is pursuing technical metrics without connection to business outcomes. Indefinite prototyping, testing only with synthetic data, ignoring edge cases, and a lack of stakeholder alignment also make the list.


We are a tech partner that delivers ingenious digital solutions, engineering and vertical services for industry leaders powered by vetted talents.
